The Growing Presence of Artificial Intelligence

Artificial Intelligence (AI) is increasingly shaping our lives, from autonomous vehicles on the road to AI algorithms driving decisions in healthcare, finance, and social media. AI’s ability to analyze vast amounts of data and make predictions is changing industries and making many processes more efficient. However, as AI becomes more ingrained in society, it raises significant ethical questions about the implications of machines making decisions traditionally reserved for humans.

AI now informs decisions ranging from product recommendations to life-or-death choices in healthcare, which raises the question: should we be worried about machines making these decisions? The answer is complex, because integrating AI into everyday life brings both real benefits and serious ethical concerns. Understanding both is crucial as we continue to develop and deploy AI systems.

The Role of AI in Decision Making

AI is already making decisions in many sectors, often in ways the public does not see. In healthcare, algorithms are used to diagnose diseases, recommend treatments, and predict patient outcomes from clinical data. In the legal system, risk-assessment algorithms inform bail and sentencing recommendations. In finance, algorithms drive high-frequency trading and manage investment portfolios.

These systems are designed by human programmers, but many of them, particularly machine-learning models, are not given explicit rules for each decision. Instead, they learn patterns from large datasets and apply those patterns to new cases. The central concern is that the reasoning behind such decisions is often opaque, even when those decisions affect critical aspects of our lives.

Bias in AI: The Danger of Prejudiced Decision Making

One of the most pressing concerns with AI decision-making is bias. AI systems are only as unbiased as the data they are trained on. If the data used to train an AI system contains biases—whether racial, gender-based, or socio-economic—there is a significant risk that the machine will perpetuate and even amplify these biases. In fact, AI systems have already been shown to exhibit biases that reflect those present in the data used to develop them.

For example, some facial recognition systems have been found to be less accurate at identifying people of color, particularly Black individuals, in part because the data used to train them was not sufficiently diverse. Similarly, predictive algorithms used in the criminal justice system have been criticized for reinforcing existing biases in arrest and sentencing patterns, which disproportionately affects minority communities.

These biases raise critical ethical questions: Who is responsible when an AI system makes a biased decision? How can we ensure that AI systems promote fairness and equality? The danger is that AI can make decisions at a scale and speed that humans cannot easily oversee, so a biased system may cause widespread harm before anyone detects and corrects the problem.

The Transparency Problem: Can We Trust AI?

Another major ethical concern surrounding AI decision-making is transparency. AI systems, particularly those based on deep learning, are often described as “black boxes” because their internal decision-making processes are not easily understood, even by their creators. This opacity can make it difficult for individuals to understand why an AI system reached a particular decision, especially when the stakes are high.

For example, in the healthcare industry, if an AI system recommends a particular course of treatment for a patient, the patient may have no way of understanding how that decision was reached. Was it based on a comprehensive analysis of the patient’s medical history, or was the system biased toward certain treatments due to skewed data?

In many cases, AI systems are used in critical areas where a lack of transparency could lead to harm. If an AI system denies someone a loan or a job based on unclear criteria, or if an algorithm determines that an individual is unfit for a particular treatment, the inability to understand the reasoning behind these decisions raises significant ethical concerns. It also raises the question of whether we can trust machines to make decisions that directly affect human lives.
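To make the contrast concrete, here is a minimal sketch of what an explainable decision can look like. It assumes a simple linear scoring model whose features, weights, and threshold are hypothetical and not taken from any real lender. Because the score is just a sum of per-feature terms, the system can report which factors pushed an applicant toward denial. Deep learning models do not decompose this neatly, which is exactly why they are harder to explain.

```python
# Hypothetical linear credit-scoring model: the weights, bias, and threshold
# are illustrative assumptions, not values from any real system.
WEIGHTS = {"income_thousands": 0.04, "debt_ratio": -3.0, "late_payments": -0.5}
BIAS = -1.0
THRESHOLD = 0.0


def decide_and_explain(applicant):
    """Return the decision, the score, and each feature's contribution,
    ordered from most negative (strongest reason for denial) to most positive."""
    # Each feature's contribution is its weight times the applicant's value.
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    decision = "approve" if score >= THRESHOLD else "deny"
    reasons = sorted(contributions.items(), key=lambda item: item[1])
    return decision, score, reasons


applicant = {"income_thousands": 50, "debt_ratio": 0.6, "late_payments": 2}
decision, score, reasons = decide_and_explain(applicant)
print(decision, round(score, 2))
for name, value in reasons:
    print(f"  {name}: {value:+.2f}")
```

For this hypothetical applicant the score is negative, and the largest negative contribution comes from the debt ratio. That is the kind of reason a person could understand and, if it rests on wrong data, contest.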

Accountability: Who is Responsible for AI’s Actions?

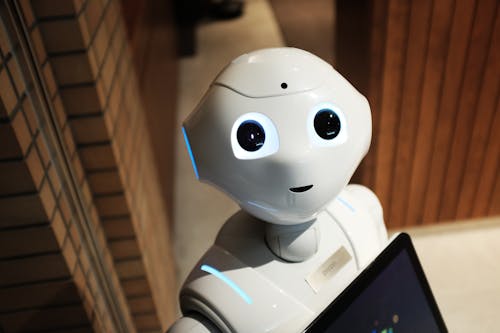

When an AI system makes a decision that causes harm or leads to unintended consequences, who is responsible? This is one of the most difficult ethical questions regarding AI. Many AI systems operate autonomously, without direct human intervention, so when something goes wrong it can be hard to pinpoint who should be held accountable: the developers who created the system, the companies that deployed it, or the machine itself.

This issue of accountability is especially problematic in high-risk sectors such as autonomous driving. If an autonomous vehicle makes a mistake and causes an accident, who is liable? The car manufacturer, the software developers, or the owner of the vehicle? As AI systems become more autonomous, the traditional concepts of liability and responsibility may no longer apply, creating legal and ethical challenges.

AI and the Future of Employment: Automation vs. Human Jobs

AI’s ability to perform tasks traditionally done by humans has sparked concerns about job displacement. From robots on factory floors to self-checkout kiosks that replace cashiers, automation, increasingly driven by AI, is rapidly changing the workforce. While AI can lead to greater efficiency and productivity, it also raises questions about the ethics of replacing human workers with machines.

What happens to workers displaced by AI? Are they given the training and resources they need to move into new roles? Society has a moral responsibility to address the impact of automation on jobs and to ensure that workers are not left behind in an increasingly automated world.

Furthermore, the expansion of AI-driven automation may create a divide between those who benefit from these technological advancements—such as those with the skills to work in AI-related fields—and those who face job loss and economic hardship. The ethical question here is how we can ensure that AI-driven progress does not exacerbate existing social inequalities.

The Role of Ethics in AI Development

Given the ethical challenges associated with AI, many experts argue that the development and deployment of AI systems must be guided by strong ethical frameworks. These frameworks should address issues such as fairness, transparency, accountability, and privacy, and ensure that AI is used to enhance human well-being rather than undermine it.

Ethical guidelines can help developers and companies decide how AI systems should be built, trained, and deployed. For example, efforts to reduce bias in AI may involve building more diverse datasets or conducting regular audits to check for unfair outcomes. Transparency efforts might focus on developing AI systems that explain their decision-making processes in a way users can understand.
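A fairness audit can start with something as simple as comparing outcome rates across groups. The sketch below assumes binary decisions (1 = approved, 0 = denied) and uses made-up audit data. It computes each group's selection rate and the ratio of the lowest rate to the highest. The 0.8 cutoff mirrors the “four-fifths rule,” a rule of thumb from U.S. employment-discrimination guidance; it is one heuristic among many, not a complete definition of fairness.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Fraction of positive decisions (1) for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received any positive decision
    return min(rates.values()) / highest


# Hypothetical audit data: 1 = approved, 0 = denied
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"ratio = {ratio:.2f}:", "flag for review" if ratio < 0.8 else "within rule of thumb")
```

In this made-up data, group A is approved 80% of the time and group B 20%, giving a ratio of 0.25, so the system would be flagged. A low ratio is a signal for human investigation rather than proof of discrimination; a fuller audit might also compare error rates across groups.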

Governments, regulators, and independent organizations have a critical role to play in establishing policies that ensure AI is developed and used ethically. By creating clear standards for accountability and ethical AI usage, society can better navigate the complexities of AI technology while protecting individual rights and freedoms.

Should We Be Worried?

As AI continues to evolve and play a larger role in decision-making, the ethical challenges it poses cannot be ignored. Concerns about bias, transparency, accountability, and the displacement of human workers are significant and must be addressed. While AI has the potential to revolutionize industries and improve lives, its ethical implications require careful consideration.

Ultimately, the answer to whether we should be worried about machines making decisions depends on how we, as a society, choose to develop, regulate, and use AI. If done responsibly, with a strong ethical framework in place, AI can be a force for good, helping to solve complex problems and improve human life. However, without careful oversight, it risks perpetuating biases, eroding privacy, and making decisions that are beyond our control.

As we move forward, it is essential that we continue to ask these tough ethical questions, ensuring that the machines we build are used to benefit all of humanity, not just a select few. Only through thoughtful, ethical development and regulation can we fully harness the potential of AI while mitigating the risks it poses.